Meaningful dimensionality reduction

An extension to the autoencoder architecture for ML anomaly detection. This post introduces Variational Auto-Encoders (VAEs), a variant of the original autoencoders that applies regularization to the encoding step to obtain more meaningful embeddings of the input data.

Following our previous post on the PHOENIX Blog, “Autoencoders for anomaly detection” [1] from March 2021, this one expands on the idea of autoencoders by describing a more powerful variant: Variational Auto-Encoders (VAEs). To recap the previous post, autoencoders are Machine Learning models capable of reconstructing their input data. They do so in two steps:

- Encode: reduce the dimensionality of the input by aggregating it into “features”, numbers that represent inherent characteristics of the input data. By encoding, the model reduces the input data to an array of features, or “embeddings”: a list of numbers that summarizes the input data as a whole.

- Decode: from the “feature map” (also called “feature array” or “embeddings”), the model tries to reconstruct the input data as accurately as it can, for example using deconvolution (transposed convolution) layers in a convolutional autoencoder.
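
As a minimal illustration of the two steps, the Python sketch below uses a linear autoencoder with a single, hand-picked weight vector `W` (hypothetical; a real autoencoder learns its weights and usually stacks several nonlinear or convolutional layers). The encoder projects a 2-D point onto `W`, producing one feature, and the decoder maps that feature back to 2-D. Inputs that match the pattern captured by the encoder are reconstructed almost perfectly while others are not, which is why reconstruction error is a common anomaly score for autoencoders.

```python
import math

# Hypothetical, hand-picked weights: a unit vector along the diagonal.
# A trained autoencoder would learn these; they only illustrate the data flow.
W = (1 / math.sqrt(2), 1 / math.sqrt(2))


def encode(x):
    """Compress a 2-D point into a single feature (its projection onto W)."""
    return x[0] * W[0] + x[1] * W[1]


def decode(z):
    """Map the 1-D feature back to 2-D using the same (tied) weights."""
    return (z * W[0], z * W[1])


def reconstruction_error(x):
    """Squared Euclidean distance between the input and its reconstruction."""
    x_hat = decode(encode(x))
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


if __name__ == "__main__":
    print(reconstruction_error((2.0, 2.0)))   # near zero: fits the learned pattern
    print(reconstruction_error((2.0, -2.0)))  # 8.0: orthogonal to W, fully lost
```

The second point has no component along `W`, so encoding discards all of its information and the decoder returns the origin.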

As explained in that post [1], autoencoders can be used for anomaly detection. Although “regular” autoencoders can reconstruct the input data accurately (i.e., with low information loss), they are usually trained without any constraint on the structure of the encoded (latent) space. This means the model has no mathematical mechanism to ensure that the reduced representations preserve the structure of the input data. The main drawback of this approach is that many points in the encoded space can be meaningless once decoded, and the training process is prone to overfitting. To avoid meaningless content after decoding the embeddings, the model needs regularization: a constraint that gives the latent space an exploitable and interpretable structure. Adding regularization to the compression step makes it not only reduce the dimensionality of the input data, but do so while preserving the structure of the information in the reduced representations.

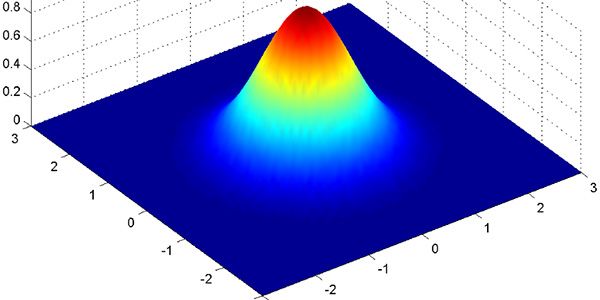

Variational Auto-Encoders are a variant of regular autoencoders that apply regularization to the encoding step of the reconstruction process. Instead of encoding an input as a single point in the latent space, a VAE encodes it in two steps: first, the input is encoded as a probability distribution over the latent space; second, the actual reduced representation is sampled from that distribution. The encoded distributions are pushed to resemble a standard normal distribution, which gives the latent representations these two properties:

- Continuity: points that are close in the latent space should decode to similar data in the original space.

- Completeness: points sampled from the latent distribution should be related to the other points in it, so that they decode to meaningful content rather than noise.
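
The sampling step can be sketched in Python as follows. The sketch assumes, as is common, that the encoder outputs the mean `mu` and log-variance `log_var` of a diagonal Gaussian for each input (the values in the example are hypothetical). Instead of sampling `z` directly, it uses the reparameterization trick, the standard way VAEs implement this step: it draws standard-normal noise `eps` and computes `z = mu + sigma * eps`, so that in a real model gradients can flow back to the encoder through `mu` and `log_var`.

```python
import math
import random


def reparameterize(mu, log_var, eps):
    """Map standard-normal noise eps to a sample from N(mu, diag(exp(log_var))).

    z = mu + sigma * eps with sigma = exp(0.5 * log_var). In a real VAE this
    keeps z differentiable with respect to the encoder outputs mu and log_var.
    """
    return [m + math.exp(0.5 * lv) * e for m, lv, e in zip(mu, log_var, eps)]


def sample_latent(mu, log_var, rng=random):
    """Draw one latent vector from the encoded diagonal Gaussian."""
    eps = [rng.gauss(0.0, 1.0) for _ in mu]
    return reparameterize(mu, log_var, eps)


if __name__ == "__main__":
    # Hypothetical encoder output for one input, with a 2-D latent space.
    mu = [0.5, -1.0]
    log_var = [math.log(0.25), 0.0]  # variances 0.25 and 1.0
    rng = random.Random(0)  # seeded for reproducibility
    samples = [sample_latent(mu, log_var, rng) for _ in range(10_000)]
    mean_first = sum(s[0] for s in samples) / len(samples)
    print(f"empirical mean of z[0]: {mean_first:.3f}")  # close to 0.5
```

With many samples, the empirical mean and variance of each coordinate approach the encoded `mu` and `exp(log_var)`.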

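This approach also enables the use of variational Bayesian methods, with the regularization term expressed as the Kullback-Leibler (KL) divergence between the encoded distribution and a standard normal distribution (the prior over the latent space). The KL divergence measures how much one distribution differs from another, although it is not a true distance metric, since it is not symmetric. The loss function for training VAEs is built on the Evidence Lower Bound (ELBO), which combines a reconstruction term with this KL regularization term. The ELBO is also closely related to a second KL divergence, between the encoder's distribution and the true posterior of the latent variables given the data; that divergence cannot be computed, so the method does not minimize it directly. Because the log-likelihood of the data equals the ELBO plus this KL divergence, maximizing the ELBO minimizes that KL divergence, which makes the ELBO a good objective for training Machine Learning models (in practice, by minimizing the negative ELBO).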
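
The two terms of the ELBO can be written down concretely. In the usual notation, `log p(x) = ELBO + KL(q(z|x) || p(z|x))` and `ELBO = E_q[log p(x|z)] - KL(q(z|x) || p(z))`, where `q(z|x)` is the encoder's distribution, `p(z)` the standard normal prior and `p(x|z)` the decoder. The Python sketch below computes a single-sample estimate of the negative ELBO, assuming a diagonal Gaussian encoder (for which the KL term has a closed form) and a unit-variance Gaussian decoder, under which the reconstruction term reduces to half the squared error plus a constant. Both are common choices, not necessarily the ones used in PHOENIX; binary data, for instance, would typically use a cross-entropy reconstruction term instead.

```python
import math


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), closed form for Gaussians.

    Per dimension: 0.5 * (mu^2 + sigma^2 - log(sigma^2) - 1).
    """
    return sum(0.5 * (m * m + math.exp(lv) - lv - 1.0) for m, lv in zip(mu, log_var))


def gaussian_nll(x, x_hat):
    """-log p(x | z) for a unit-variance Gaussian decoder, dropping the constant."""
    return 0.5 * sum((a - b) ** 2 for a, b in zip(x, x_hat))


def negative_elbo(x, x_hat, mu, log_var):
    """Single-sample estimate of -ELBO = reconstruction term + KL regularizer.

    x_hat is the decoder output for one latent sample z ~ q(z | x), and
    (mu, log_var) are the encoder outputs for x.
    """
    return gaussian_nll(x, x_hat) + kl_to_standard_normal(mu, log_var)
```

For example, `negative_elbo([1.0, 2.0], [1.0, 1.0], [1.0], [0.0])` returns 1.0: 0.5 from the reconstruction term plus 0.5 from the KL term. Minimizing this quantity with gradient descent is equivalent to maximizing the ELBO, and the KL term is zero only when the encoded distribution equals the standard normal prior.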

In the PHOENIX project, we are combining Variational Auto-Encoders with Recurrent Neural Networks, specifically Long Short-Term Memory (LSTM) units, for anomaly detection in time series data. Find out more about VAE-LSTM models in the paper by Lin et al. [2].
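
This post does not detail the PHOENIX pipeline. As a rough, hedged illustration of how a window-based detector is commonly applied to a time series, the Python sketch below slides a fixed-length window over the series and flags the windows whose anomaly score exceeds a threshold. The scoring function is a placeholder for a trained model such as a VAE-LSTM; `max_deviation`, the window length and the threshold are hypothetical stand-ins chosen only to make the example run.

```python
def sliding_windows(series, window, step=1):
    """Split a 1-D time series into fixed-length, possibly overlapping windows."""
    return [series[i:i + window] for i in range(0, len(series) - window + 1, step)]


def flag_anomalies(series, window, score_fn, threshold, step=1):
    """Return the start index of every window whose score exceeds threshold.

    score_fn stands in for a trained model's anomaly score, for example the
    reconstruction error of a VAE-LSTM on that window.
    """
    flagged = []
    for k, w in enumerate(sliding_windows(series, window, step)):
        if score_fn(w) > threshold:
            flagged.append(k * step)
    return flagged


def max_deviation(w):
    """Hypothetical stand-in score: largest deviation from the window mean."""
    mean = sum(w) / len(w)
    return max(abs(v - mean) for v in w)


if __name__ == "__main__":
    series = [0.0] * 10 + [5.0] + [0.0] * 10  # a single spike at index 10
    print(flag_anomalies(series, window=4, score_fn=max_deviation, threshold=1.0))
    # [7, 8, 9, 10]: every window that contains the spike
```

A real detector would replace `max_deviation` with the trained model's score and would typically set the threshold from scores observed on normal data.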

References

[1] “Autoencoders for anomaly detection,” PHOENIX, 18 March 2021. [Online]. Available: https://phoenix-h2020.eu/autoencoders-for-anomaly-detection/. [Accessed 06 August 2021].

[2] S. Lin, R. Clark, R. Birke, S. Schönborn, N. Trigoni and S. Roberts, “Anomaly Detection for Time Series Using VAE-LSTM Hybrid Model,” in ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing, 2020.